A little dip into CI with GitHub Actions [Part 1]

A quick initiation into, or recap of, Continuous Integration, the CI of CI/CD. CI is the first part of a work style built around getting constant feedback that what you’re doing is working.

Integration is the process of importing new code - patches, upgrades, a new feature - into an existing instance of the product. In code production terms, that’s merging a feature branch into the main branch.

For development, CI provides crucial insight into the current state of the code. As you work, tools in the background run some commands and build out some or all of a working environment, mimicking how the production build would behave, and giving you an up-to-date artefact to run tests against to see if you’re there yet.

The basic starting point of this whole thing is that before CI, we had to make do with running scripts to produce the same result - low-level automation stuff. The beauty of the pipeline is that it can do as much or as little as you want.

In this post I’m going to go into slightly more detail about my CI pipeline, either for your interest or for your learning or, failing all else, because I needed more content and I haven’t talked about it yet. This is specific to my blog repository pipeline, but it’s generic enough to extrapolate from.

The Goal

Hold up though, what am I trying to achieve? My blog site uses the Hugo tool to generate static files that I can drop onto a webserver and run somewhere (more on this another time). I like Nginx. I don’t really know why I like it as much as I do, but when I was a wee sprog I was told it was a Magical Asynchronous Webserver written by crazy Russians (stereotypes aside, I wonder if Russian PFYs are told that stuff gets written by crazy Englishmen? Who knows) that was orders of magnitude faster and more efficient than Apache, the culturally-appropriated-named webserver that comes a close second for market share. Mostly, it seems to be personal flavour that splits opinion.

Anyway, having gotten completely off-topic, I use nginx for my Hugo build because I have half an idea of how the webserver config works, which is three-quarters more of an idea than I have about Apache. I use Kubernetes to run containers, and so I build container images to run on Kubernetes. So, what I need out of my build process is a container image that I can run - locally for testing or in Kubernetes for “production” (quote marks because it’s only technically prod, but to be very clear it’s best-effort).

So, onto the pipeline

Firstly, the provider. There are many. GitLab, GitHub, CircleCI, Gitea, CodeShip, Travis, Drone… AWS has something I’ve never cared enough to look at. The point is there are a lot of options available, both hosted by cloud providers and self-hosted.

I have my git repositories in GitHub, for a couple of reasons that wouldn’t withstand much scrutiny beyond “my servers are best-effort”. It does provide some benefits, though, among which is access to a small number of runtime-minutes of GitHub Actions, their CI offering. It’s reasonably new, and by that I mean it’s been developed over the last handful of years and has taken off in popular use much more recently.

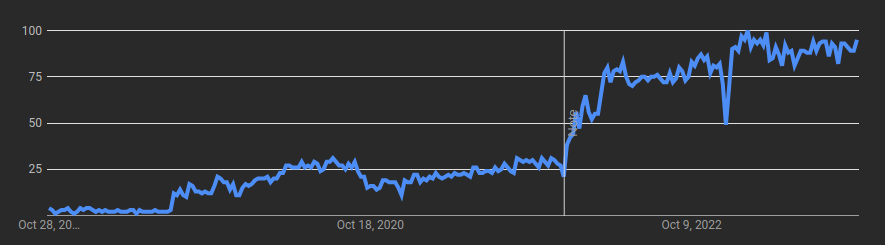

Here’s a Google Trends graph of search activity for GitHub Actions. At no point does the scale make any sense, don’t ask me what it is.

Onto the setup.

Most CI providers take some or all of the config to run the pipeline from a file stored in the repository itself. GitHub already uses the directory .github to store GitHub-related stuff in, and it expanded this by adding a subdirectory, workflows, in which to store Actions config files.

Third, the syntax.

Building from the concept that all we’re really doing is running an elaborate script, Actions has the concept of Jobs, each roughly analogous to a single script. A workflow might have several jobs, in the same way that a script might perform a task and call another script. But there are some basics to the framework: it’s written in YAML (infamously, “YAML Ain’t Markup Language”, but it’s just another way to make key-value config human-legible); and a minimum set of keys is required, but many more are available to enhance the look and feel of the action.

For starters, a minimum spec workflow has the on object and the jobs object.
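As a rough sketch of that minimum, the smallest workflow I’d expect GitHub to accept looks something like this. The filename, the job ID hello and the echoed message are placeholders of mine, and in practice a job also needs runs-on and at least one step, both of which are covered further down.

```yaml
# .github/workflows/minimal.yml (hypothetical filename)
on: push                      # the trigger: run on every git push

jobs:
  hello:                      # placeholder job ID
    runs-on: ubuntu-latest    # where the job executes
    steps:
      - run: echo "Hello from CI"
```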

On [Activity]

On is the section where the trigger(s) is/are defined. Some of the crowd favourites:

- push (trigger on every git push)

- pull_request (trigger for pull requests)

- workflow_dispatch (gives a button in the web UI for those inclined towards the clicky clicky)

- there’s a more exhaustive list in the docs here
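Triggers can be combined in one workflow. A minimal sketch, assuming you want all three of the favourites above with no extra filtering:

```yaml
on:
  push:                # every git push
  pull_request:        # pull request activity
  workflow_dispatch:   # manual "Run workflow" button in the web UI
```

An event with nothing under it just uses its defaults, which is why the keys can be left empty.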

Within these options you can also filter to include or exclude branches and directory paths. Let’s say you only want to run a certain workflow against branches named with web/ at the beginning, and also your main branch. You can achieve this like so:

    on:
      push:
        branches:
          - main
          - web/**

Equally if you wanted to exclude those branches, you’d use branches-ignore instead of branches.
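For illustration, the inverse of the filter above might look like this, reusing the same branch names. One thing to be aware of: as far as I know, branches and branches-ignore can’t both be used for the same event in one workflow, so it’s one or the other.

```yaml
on:
  push:
    branches-ignore:
      - main
      - 'web/**'   # quoted to keep the glob unambiguous in YAML
```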

The same goes for changes within a directory. In case you don’t want to rebuild the entire codebase when you’re just updating the docs or the readme file, you could use:

    on:
      push:
        paths-ignore:
          - docs/**
          - README.md

This goes hand in hand with its opposite, paths, which only triggers when changes occur in matching files or directories.
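A sketch of the paths version, assuming a hypothetical docs-only workflow (a link checker, say) that should run only when the docs or the readme change:

```yaml
on:
  push:
    paths:
      - 'docs/**'
      - README.md
```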

Jobs

The heavy lifter of the workflow file, jobs is what defines the “scripts” to run. Each job is nested under a unique (to this workflow) name:

    jobs:
      my-job:
        name: Test job

This makes a job called my-job, and gives it some metadata: in a GUI, it’ll show up as the job “Test job”. It’s not necessary, but I feel that naming jobs and alphabetising lists are all that separates humanity from beasts of the forest.

runs-on is the next value to fill in, and it’s an important one: it’s the runner, or the place that the code will actually execute.

GitHub offers runners hosted by themselves: initially free, paid for over a certain amount of usage (unless your repo is public, and then you’re deemed to be working for the benefit of the open-source community and get free builds forever (for now)). There are a few options, but to use GitHub’s Linux runner we can give it the value ubuntu-latest. The docs list the potential values available, and they all come with their own quirks, features and baked-in tools.

timeout-minutes is technically optional, but I did once see a build that had been running for over 3 months.
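Putting those job-level keys together gives something like the sketch below. The 15-minute limit is an arbitrary number I picked for illustration, and the single echo step is only there so the job has something to run. If you leave timeout-minutes out, the documented default is 360 minutes.

```yaml
jobs:
  my-job:
    name: Test job
    runs-on: ubuntu-latest   # GitHub-hosted Linux runner
    timeout-minutes: 15      # assumed value; cancel the job if it runs longer
    steps:
      - run: echo "placeholder step"
```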

steps is the next value to fill, and you can approach this in as many ways as you care to name. One is to treat each step as an intentional command, deliberately shaped, named and given an ID.

The other is to just dump an entire existing release Bash script into a run command and have it be done with.

I’m much more of the former thinking, especially as when it doesn’t work, it’s much nicer to be able to break down exactly where the process falls over, which is something that might not happen with an entire embedded script. So, I use multiple steps to get some granularity in the job.
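To make the contrast concrete, here is a hedged sketch of both approaches. The script paths are hypothetical stand-ins, not files from my repo.

```yaml
steps:
  # Approach one: a single opaque step. If it fails, all you know is
  # that "Release" failed somewhere inside the script.
  - name: Release
    run: ./scripts/release.sh        # hypothetical script

  # Approach two: the same work split into named steps. A failure
  # points at the step that broke, and the ID lets later steps
  # refer back to this one.
  - name: Build site
    id: build
    run: ./scripts/build.sh          # hypothetical script
  - name: Run tests
    id: test
    run: ./scripts/test.sh           # hypothetical script
```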

Here are some steps from my build job, with a brief explanation of what each does.

    - name: Checkout code
      uses: actions/checkout@v4

This is an important one. It gets the code from the repo and makes a local copy of it on the runner. It does this using an automatically provided GitHub secret (the GITHUB_TOKEN), an API token scoped to that repo only (checkout only needs read access).

    - name: Get timestamp
      id: tag
      run: |
        timestamp="$(date +%s)"
        echo "timestamp=$timestamp" >> "$GITHUB_OUTPUT"

This captures the current time in Unix epoch format (seconds since 1970) from the date command and writes it to the step’s outputs. It’s handy because it lets me tag an image with that exact second, and searching on that number filters my list of images down to one potential match.
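Because the step has the ID tag, any later step in the same job can read the value back through the steps context. A small sketch, where the step name and the message are mine:

```yaml
- name: Show the tag
  run: echo "Image tag will be dev-${{ steps.tag.outputs.timestamp }}"
```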

    - name: Set up Docker Buildx
      uses: docker/setup-buildx-action@v3
      with:
        driver-opts: image=moby/buildkit:master

Want to build a Dockerfile? Probably going to need the ability to run docker builds. Docker Buildx is useful for multi-architecture builds, which aren’t essential but one day I’ll get around to running an ARM node or three.
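I’m not doing multi-architecture builds yet, but as a sketch of what it might look like: QEMU emulation is set up before Buildx, and the build step is given a platforms list. The platform choice and push: false are assumptions for illustration, not what my pipeline does.

```yaml
- name: Set up QEMU
  uses: docker/setup-qemu-action@v3      # emulation for non-native architectures

- name: Set up Docker Buildx
  uses: docker/setup-buildx-action@v3

- name: Build for two architectures
  uses: docker/build-push-action@v5
  with:
    context: .
    platforms: linux/amd64,linux/arm64   # assumed targets
    push: false                          # build only, don't publish
```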

    - name: Login to GitHub Container Registry
      uses: docker/login-action@v3
      with:
        registry: ghcr.io
        username: ${{ github.actor }}
        password: ${{ secrets.GHCR_TOKEN }}

Want to save the image for later? You’ll need a container registry, and that probably means logging into it. The values should be fairly self-explanatory.
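GHCR_TOKEN here is a secret I created myself. An alternative I’m aware of, sketched below under the assumption that the image lives under the same account or organisation as the repo, is to use the built-in GITHUB_TOKEN and grant the job permission to write packages. The job ID build is a placeholder.

```yaml
jobs:
  build:
    runs-on: ubuntu-latest
    permissions:
      contents: read     # enough for checkout
      packages: write    # allows pushing to ghcr.io
    steps:
      - name: Login to GitHub Container Registry
        uses: docker/login-action@v3
        with:
          registry: ghcr.io
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}
```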

    - name: Build and push
      uses: docker/build-push-action@v5
      with:
        push: true
        context: ${{ github.workspace }}/
        file: ${{ github.workspace }}/Dockerfile
        tags: ${{ secrets.GHCR_REGISTRY }}/blog:dev-${{ steps.tag.outputs.timestamp }}

The final step in this job: build the image from the Dockerfile. I’ve specified the location of the Dockerfile, as well as the context for the Docker build process to run, and I’m tagging the image as blog:dev-<timestamp>.
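Stitched together, the steps above would sit in a workflow file along these lines. The steps are the ones already shown; the filename, workflow name, trigger, job ID and timeout are assumptions I’ve filled in to make the sketch complete, so adjust to taste.

```yaml
# .github/workflows/build.yml (hypothetical filename)
name: Build dev image          # assumed workflow name

on:
  push:
    branches:
      - main                   # assumed trigger

jobs:
  build:                       # assumed job ID
    name: Build
    runs-on: ubuntu-latest
    timeout-minutes: 15        # assumed limit
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Get timestamp
        id: tag
        run: |
          timestamp="$(date +%s)"
          echo "timestamp=$timestamp" >> "$GITHUB_OUTPUT"

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3
        with:
          driver-opts: image=moby/buildkit:master

      - name: Login to GitHub Container Registry
        uses: docker/login-action@v3
        with:
          registry: ghcr.io
          username: ${{ github.actor }}
          password: ${{ secrets.GHCR_TOKEN }}

      - name: Build and push
        uses: docker/build-push-action@v5
        with:
          push: true
          context: ${{ github.workspace }}/
          file: ${{ github.workspace }}/Dockerfile
          tags: ${{ secrets.GHCR_REGISTRY }}/blog:dev-${{ steps.tag.outputs.timestamp }}
```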

Roundup

I’ve now built a dev-tagged image that I can use in tests, run locally, run in a cluster - I could do blue/green deployments - or whatever else I wanted with it.

When you’re older, I’ll tell you what happens with the dev image to make it grow up to be a prod image.